Data Pipelines and Data Warehouses Built on a Modern Technology Stack

Switch from a cloud box reporting system to a self-managed, Google Cloud-based design. Modularize and simplify your architecture to increase transparency, utility, and growth.

Modular Design that will Scale with your Business

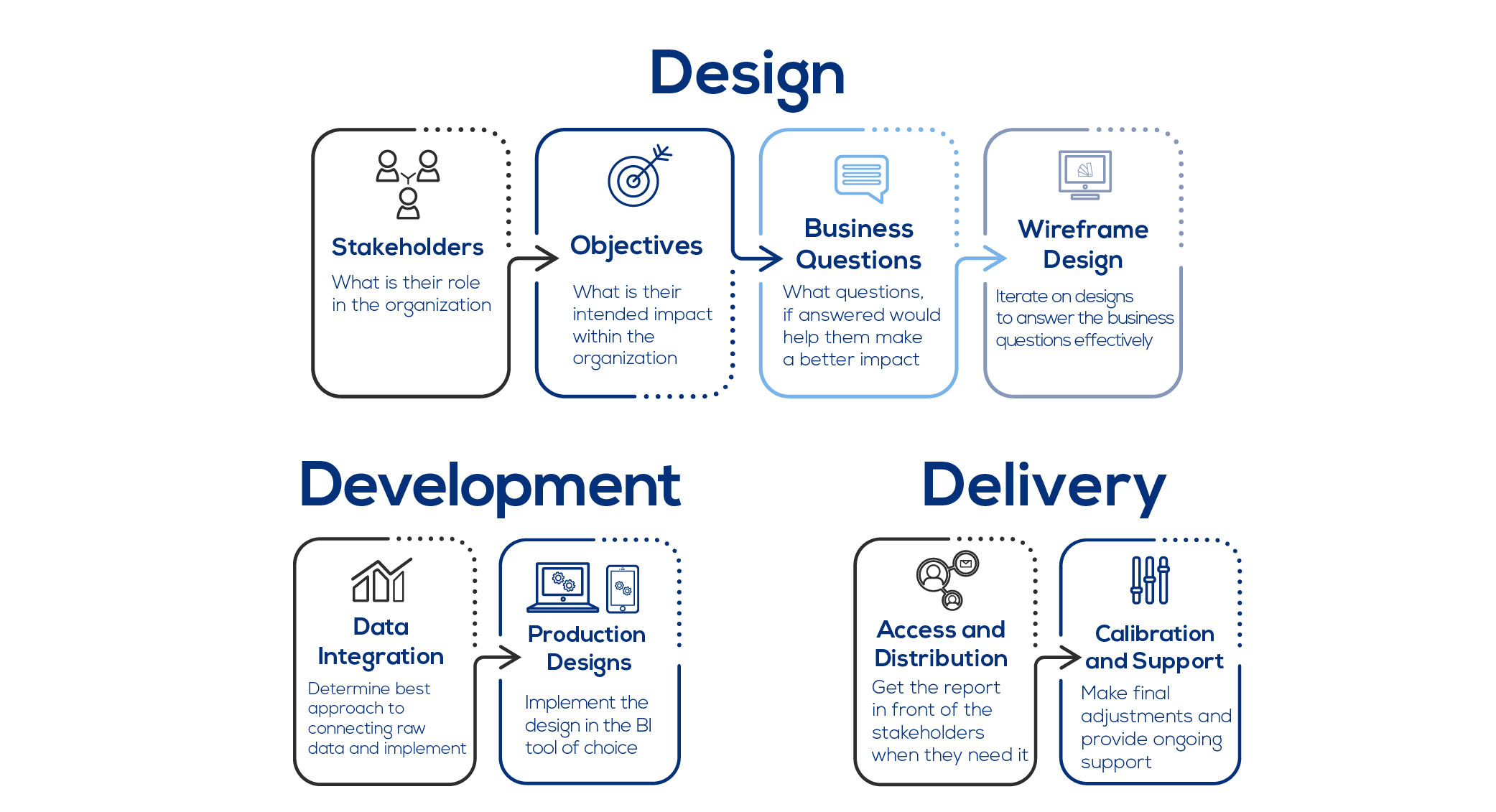

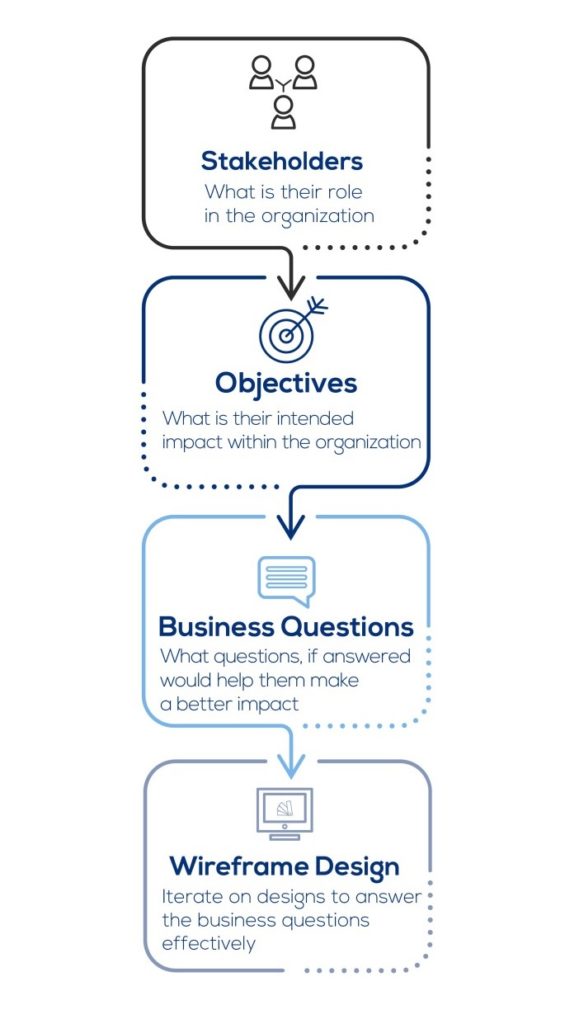

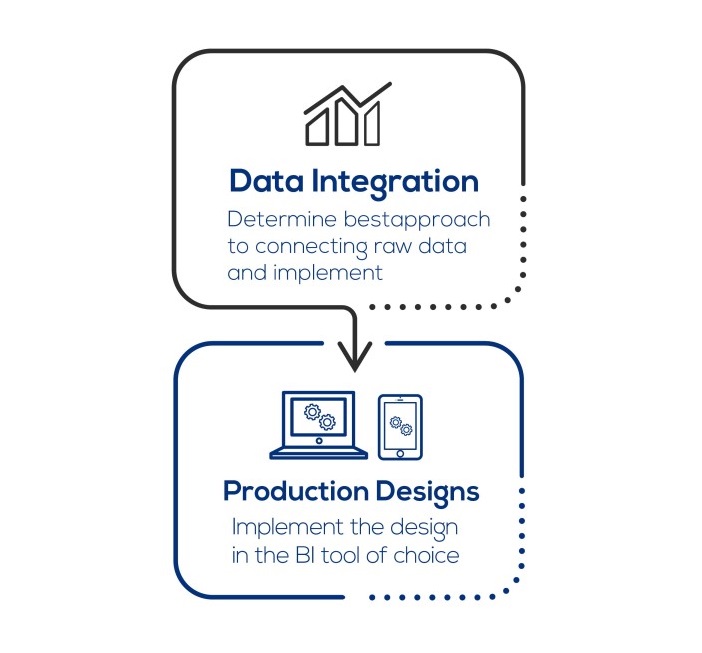

Design

Development

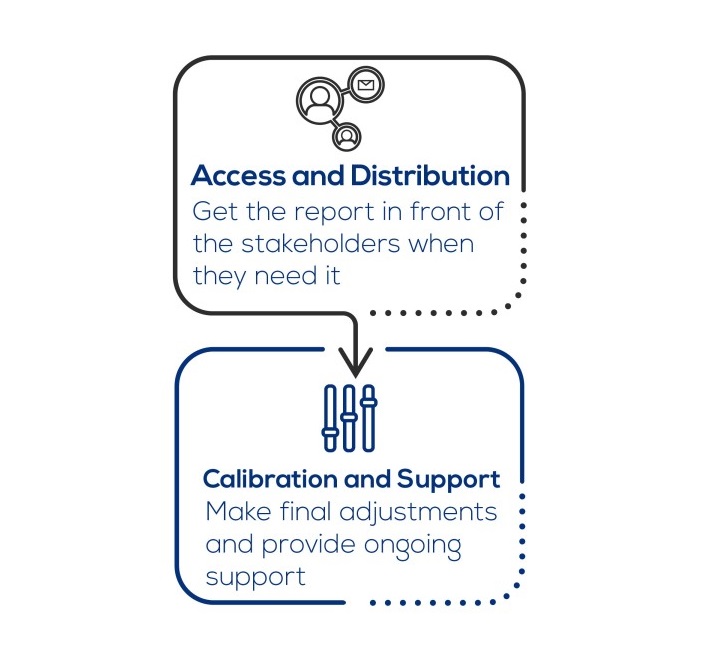

Delivery

FAQ

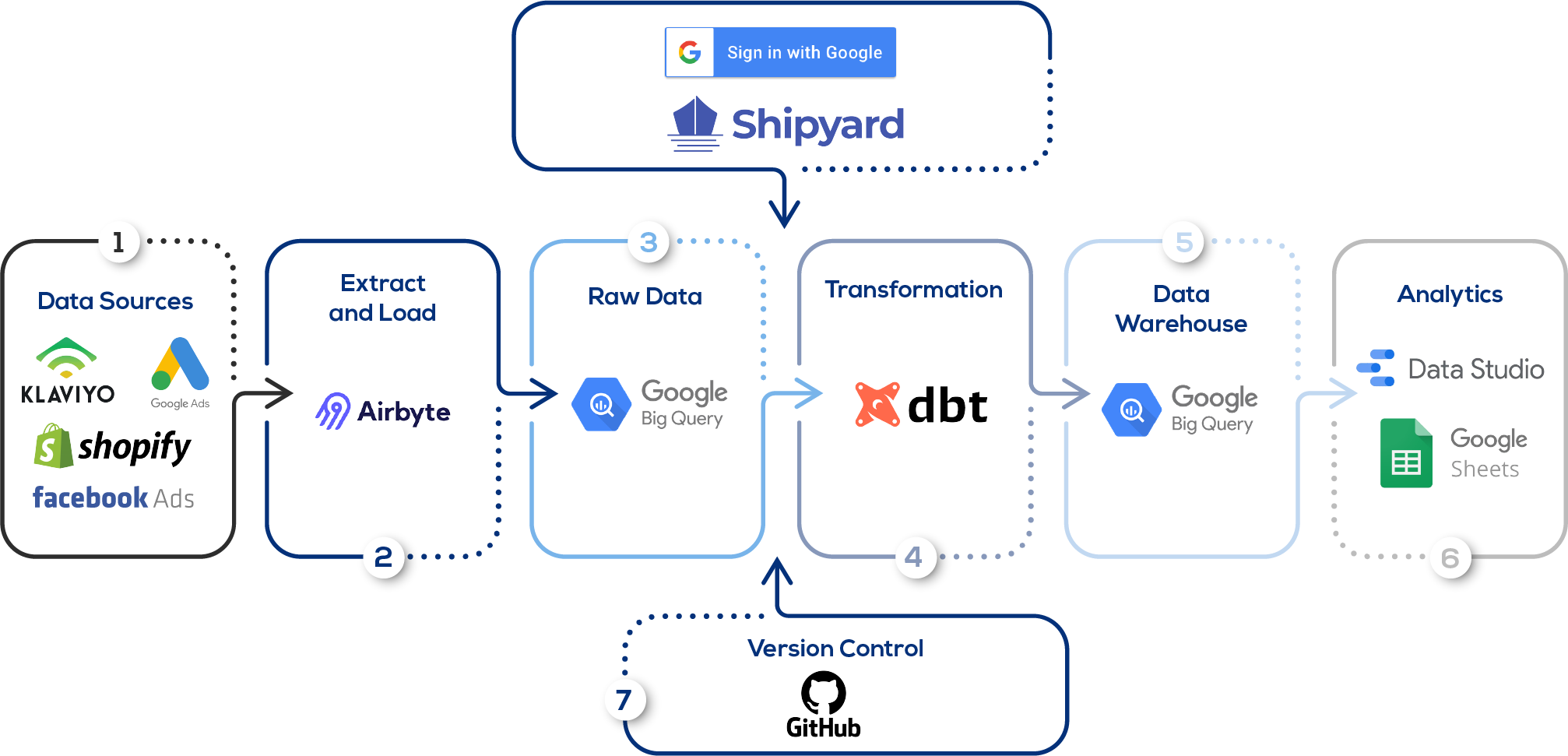

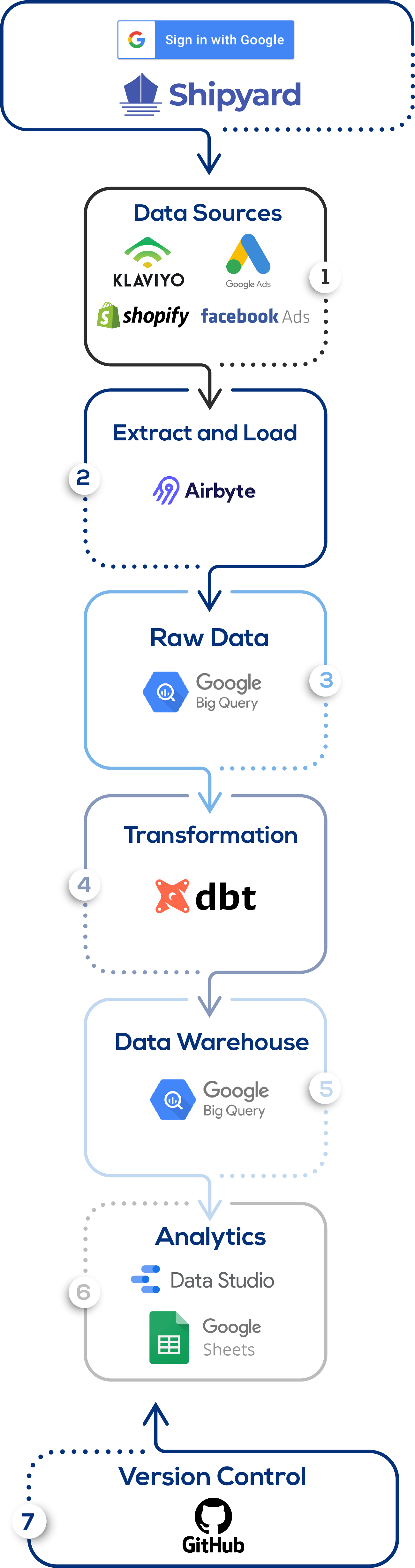

A data pipeline describes the entire process of taking data from its source system to a user-facing report. This typically includes an "Extract and Load" process, a cloud data warehouse, a "Data Transformation" step, and a connection to a business application.
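As a rough illustration of those stages, here is a minimal sketch in Python. It assumes an in-memory SQLite database as a stand-in for a cloud data warehouse, and the sample rows, table names, and function names are all hypothetical, chosen only for this example.

```python
import sqlite3

# Hypothetical source records, standing in for rows pulled from a source system.
SOURCE_ROWS = [
    {"order_id": 1, "customer": "acme", "amount_cents": 1250},
    {"order_id": 2, "customer": "acme", "amount_cents": 750},
    {"order_id": 3, "customer": "globex", "amount_cents": 3000},
]


def extract_and_load(conn, rows):
    """Extract and Load: copy source rows into a raw table without changing them."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount_cents INTEGER)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount_cents)", rows
    )


def transform(conn):
    """Data Transformation: build a report-ready table from the raw table."""
    conn.execute(
        """
        CREATE TABLE report_revenue_by_customer AS
        SELECT customer, SUM(amount_cents) / 100.0 AS revenue
        FROM raw_orders
        GROUP BY customer
        """
    )


def report(conn):
    """Business application: read the transformed table, as a reporting tool would."""
    return conn.execute(
        "SELECT customer, revenue FROM report_revenue_by_customer ORDER BY customer"
    ).fetchall()


def run_pipeline():
    # SQLite in memory is only a placeholder for the warehouse in this sketch.
    conn = sqlite3.connect(":memory:")
    extract_and_load(conn, SOURCE_ROWS)
    transform(conn)
    result = report(conn)
    conn.close()
    return result
```

Calling `run_pipeline()` returns one revenue row per customer. Keeping the raw table separate from the reporting table means the transformation can be changed and re-run without extracting the data again.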

We work with most databases and cloud providers, including but not limited to PostgreSQL, MySQL, BigQuery, Redshift, Azure, and Snowflake.

You definitely can use a SaaS tool like Supermetrics, Stitch, or Airbyte. However, these tools typically cover just one step in the architecture. The data they deliver will often need to be transformed before it works with any reporting tools, which is where the data warehouse and transformation steps become relevant.